Thousands of private conversations with ChatGPT are indexed in Google. Anthropic radically changes its privacy policy. September 2025: the month that is redefining trust in artificial intelligence.

Double Whammy Shaking the Business World

This week we have witnessed two simultaneous crises that are redefining how companies should think about artificial intelligence. It’s not just a technical problem: it’s an existential crisis for any organization that uses AI in its day-to-day operations.

FRONT 1: The ChatGPT Scandal – Thousands of Exposed Conversations

The magnitude is alarming: in August 2025, thousands of private ChatGPT conversations appeared publicly indexed on Google, Bing and other search engines. The culprit was not a hack, but a now-discontinued OpenAI option labeled “Make this chat discoverable.”

How exactly did this happen?

This opt-in checkbox, offered when a user created a shared link to a conversation, allowed that shared page to be indexed by search engines. Many users ticked it without grasping the consequence, so conversations they believed to be private became accessible with a simple search.

Real cases that expose the risk:

- Users sharing full CVs with sensitive personal information

- Mental health conversations where people seek psychological help

- Confidential legal consultations treating ChatGPT as a lawyer

- Sensitive business information discussed without knowing the risks

- Personal medical data of patients consulting symptoms

Current status: Although Google has begun to limit the visibility of these conversations, many are still available on other search engines and the reputational damage has already been done.

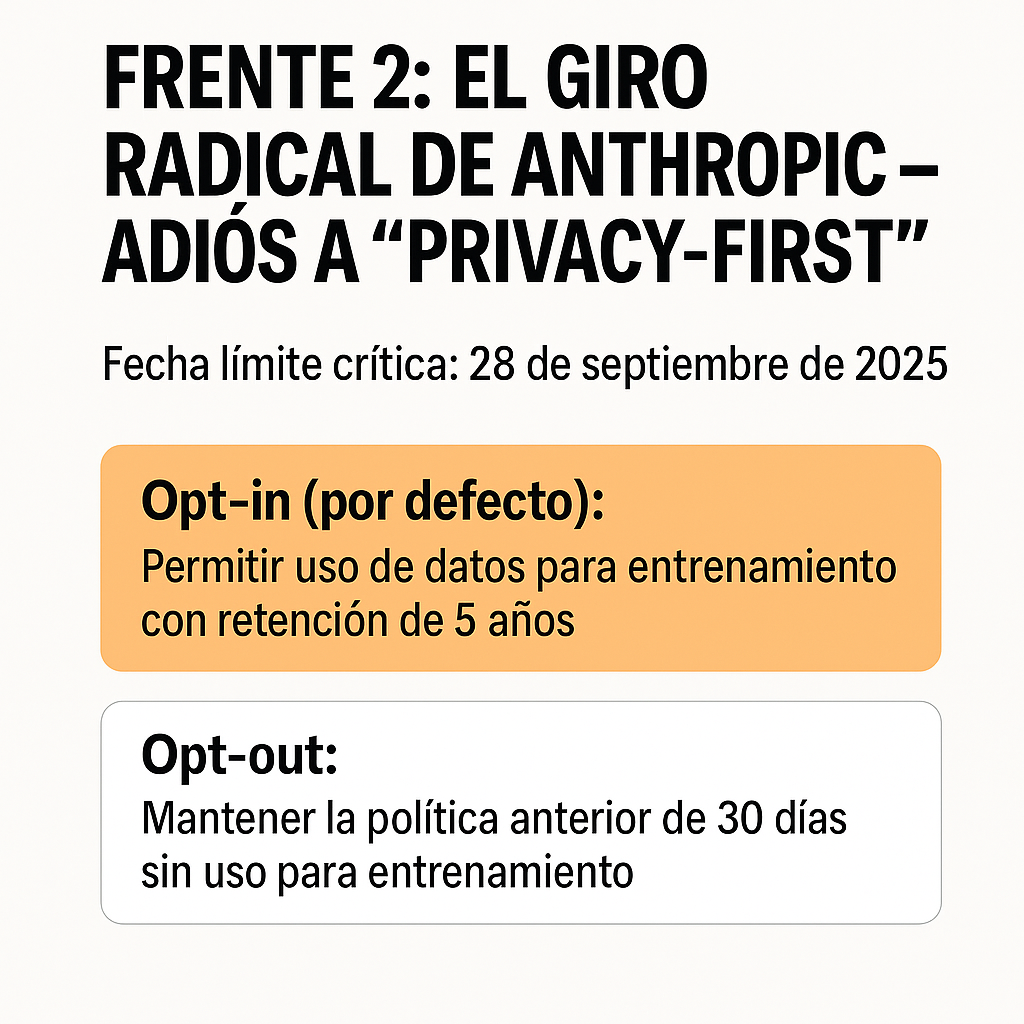

FRONT 2: Anthropic’s Radical Turnaround – Farewell to “Privacy-First”

The most shocking change of the year: Anthropic, which positioned itself as the “privacy-first” alternative to ChatGPT, has just reversed its default data policy for consumer accounts.

The fundamental change:

- Before: Claude did NOT use user data to train models (30-day retention).

- Now: Unless users opt out, it WILL use their conversations for training (retention up to 5 years).

Critical deadline: September 28, 2025

By this date, every consumer user must actively choose:

- Opt-in (default): Allow use of data for training with 5-year retention.

- Opt-out: Keep the previous terms, with no use for training and 30-day retention.

Important note: This change does NOT affect Claude for Work (Team and Enterprise plans), Claude Gov, Claude for Education, or API use. It applies to individual consumer accounts only.

Business Implications Are Immediate

Legal and Compliance Risks

- Violations of confidentiality agreements with customers and partners

- Exposure of inside (non-public) information that can result in lawsuits

- Non-compliance with regulations such as GDPR, HIPAA, or industry standards.

- Corporate liability for third-party data breaches

Reputational Impact

- Loss of customer and investor confidence

- Competitive advantage compromised by exposed strategies

- Communication crisis difficult to manage

- Long-term damage to brand perception

Immediate Operating Costs

- Emergency audits of all AI use

- Legal review of potentially exposed conversations

- Urgent implementation of new security policies

- Emergency training for all employees

Immediate Action Guide for Companies

URGENT ACTION – Next 48 Hours

1. Emergency Audit

- ☐ Identify who in your company is using ChatGPT and Claude.

- ☐ Check if someone has used the ChatGPT “share” function.

- ☐ Search Google for site:chatgpt.com/share "your company", site:chat.openai.com/share "your company", or related terms.

- ☐ Document any exposures encountered.
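The search step in the audit can be scripted so the same queries run consistently across every term and search engine. This is a minimal sketch. It assumes shared ChatGPT conversations are served under the chat.openai.com/share and chatgpt.com/share prefixes, and that Google and Bing accept the query in a plain q= parameter. The company terms are hypothetical placeholders. The script only builds the URLs. Opening them and recording any hits remains a manual step.

```python
"""Build search-engine queries for a ChatGPT shared-link exposure audit.

Sketch only: the share-link prefixes and search URL formats are assumptions
based on publicly visible URL patterns, not an official API.
"""
from urllib.parse import urlencode

# Assumed public prefixes under which ChatGPT shared links have been served.
SHARE_PREFIXES = ["chat.openai.com/share", "chatgpt.com/share"]

# Assumed search endpoints; each takes the query in the "q" parameter.
SEARCH_ENGINES = {
    "google": "https://www.google.com/search",
    "bing": "https://www.bing.com/search",
}


def build_audit_queries(terms: list[str]) -> list[str]:
    """Combine every share-link prefix with every quoted company term."""
    queries = []
    for prefix in SHARE_PREFIXES:
        for term in terms:
            # Quote the term so the engine matches the exact phrase.
            queries.append(f'site:{prefix} "{term}"')
    return queries


def build_search_urls(queries: list[str]) -> dict[str, list[str]]:
    """Turn each query into a ready-to-open URL for each search engine."""
    return {
        engine: [f"{base}?{urlencode({'q': q})}" for q in queries]
        for engine, base in SEARCH_ENGINES.items()
    }


if __name__ == "__main__":
    # Hypothetical terms: replace with your company, product and client names.
    terms = ["Acme Corp", "Project Falcon"]
    for engine, urls in build_search_urls(build_audit_queries(terms)).items():
        for url in urls:
            print(engine, url)
```

Quoting each term keeps the search to the exact company name. Generating one query per prefix covers links created before and after ChatGPT's domain change.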

2. Claude’s Immediate Configuration

- ☐ Access privacy settings before September 28.

- ☐ Opt out of data use for training (the “Help improve Claude” setting).

- ☐ Verify that the configuration has been applied correctly.

- ☐ Communicate the change to all corporate users.

3. Emergency Policy

- ☐ Temporary ban on ChatGPT link sharing.

- ☐ Mandatory review before using AI with sensitive information.

- ☐ Secure channels for confidential discussions.

MEDIUM-TERM STRATEGY – Next 2 Weeks

1. Corporate AI Policy

NEVER use public AI for:

- Confidential financial information

- Customer or patient data

- Non-public business strategies

- Privileged legal information

- Employee personal data

- Information covered by confidentiality agreements

2. Safe Alternatives

- Claude for Work or ChatGPT Enterprise (business plans with separate privacy terms that do not use business data for training by default)

- On-premise solutions for critical information

- Compartmentalization of information according to level of sensitivity
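Compartmentalization by sensitivity can be made concrete with a simple routing rule: classify a prompt, then send it to the tool allowed for that tier. This sketch uses three hypothetical tiers and illustrative keyword lists that are loosely mapped to the categories in the corporate policy. It is not a real data-loss-prevention product, and the tier names and destinations are placeholders.

```python
"""Route a prompt to an AI destination by sensitivity level.

A deliberately simple sketch: keyword lists, tier names and the routing
table are hypothetical placeholders, not a real DLP product or vendor API.
"""
import re
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0    # general tasks, no business or personal data
    INTERNAL = 1  # business information that must stay off consumer AI
    CRITICAL = 2  # regulated, privileged or personal data


# Hypothetical keyword patterns, loosely mapped to the policy categories.
PATTERNS = {
    Sensitivity.CRITICAL: [
        r"\bpatient\b", r"\bdiagnos\w*", r"\battorney[- ]client\b",
        r"\bsalary\b", r"\bsocial security\b",
    ],
    Sensitivity.INTERNAL: [
        r"\bconfidential\b", r"\bnda\b", r"\brevenue forecast\b",
        r"\broadmap\b", r"\bcustomer list\b",
    ],
}

# Hypothetical destination for each tier.
ROUTES = {
    Sensitivity.PUBLIC: "consumer AI (general tasks only)",
    Sensitivity.INTERNAL: "enterprise AI plan (e.g. Claude for Work, ChatGPT Enterprise)",
    Sensitivity.CRITICAL: "on-premise model",
}


def classify(text: str) -> Sensitivity:
    """Return the highest tier whose patterns match the text."""
    lowered = text.lower()
    # Check the most sensitive tier first so it wins over lower tiers.
    for level in sorted(PATTERNS, reverse=True):
        if any(re.search(p, lowered) for p in PATTERNS[level]):
            return level
    return Sensitivity.PUBLIC


def route(text: str) -> str:
    """Map a prompt to the destination allowed for its sensitivity tier."""
    return ROUTES[classify(text)]


if __name__ == "__main__":
    for prompt in ["Summarize this patient's diagnosis",
                   "Review this NDA clause",
                   "Draft a tweet about our new office"]:
        print(prompt, "->", route(prompt))
```

Keyword matching is crude. It will miss paraphrased or unlabeled data, so it complements the mandatory human review in the emergency policy rather than replacing it. The design choice that matters is checking the most sensitive tier first, so that a prompt mixing categories is always routed to the strictest destination.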

3. Training and Awareness

- Emergency sessions on AI privacy

- Real cases of what NOT to do

- Clear protocols for different types of information

The Current Landscape: Beyond ChatGPT and Claude

Other Recent Cases

- Grok (X/Twitter): More than 300,000 private conversations also appeared indexed in Google

- Common pattern: “sharing” functions on AI platforms are creating massive exposures

- Worrying trend: users treat AI as therapist, lawyer and confidant without understanding the risks

Why Is This Happening?

- Poorly designed features: The “sharing” options were not truly private.

- Lack of education: Users do not understand privacy implications.

- Competitive pressure: AI companies prioritize functionality over privacy

- Data monetization: Training with user data is financially valuable.

The Future of AI Privacy: What’s Next

Inevitable Trends

- More companies will follow Anthropic’s model: Training with user data will become the norm

- Stricter regulation: Europe’s AI Act, in force since August 2024, is already being phased in

- Market segmentation: “enterprise” vs. “consumer” versions with radically different policies

- Forced transparency: Companies will have to be clearer about data use

Predictions for 2026

- Mandatory audits of AI use in regulated sectors

- Privacy certifications as a requirement for business contracts

- Specific insurance for AI and data breach risks

- New roles: The Chief AI Privacy Officer will become common in large companies

Changes in User Behavior

- Increased awareness of the risks of sharing information with AI

- Growing demand for truly private AI solutions

- Segmentation of use: public AI for general tasks, private solutions for sensitive information

Lessons Learned and Final Recommendations

For Companies

- Never assume that “private” really means private on free AI platforms.

- Invest in enterprise solutions if you handle sensitive information

- Train your team on the real risks of AI

- Develop clear policies before an incident occurs

For Individual Users

- Treat every conversation with AI as potentially public

- Read the privacy policy and understand the changes

- Use opt-out functions when available

- Consider private alternatives for sensitive information

Conclusion: The Time to Act is NOW

This is not a future crisis: it is happening in real time. The ChatGPT exposure and Anthropic’s policy change are just the tip of the iceberg of a fundamental transformation in how the AI industry handles privacy.

Companies that act quickly will have a competitive advantage and avoid reputational crises. Those that ignore these red flags will pay the price in the form of data exposure, legal problems and loss of trust.

The question is not whether your company will be affected by these changes. The question is: will you be prepared when your confidential information shows up in a Google search?

The time to act is now. The cost of not doing so may be irreversible.

Resources and Reference Links: